# Run this cell to get everything set up.

from lec_utils import *

import lec22_util as util

diabetes = pd.read_csv('data/diabetes.csv')

from sklearn.model_selection import train_test_split

diabetes = diabetes[(diabetes['Glucose'] > 0) & (diabetes['BMI'] > 0)]

X_train, X_test, y_train, y_test = (

train_test_split(diabetes[['Glucose', 'BMI']], diabetes['Outcome'], random_state=1)

)

Lecture 22¶

Logistic Regression¶

EECS 398: Practical Data Science, Spring 2025¶

practicaldsc.org • github.com/practicaldsc/sp25 • 📣 See latest announcements here on Ed

Agenda 📆¶

- Predicting probabilities.

- Cross-entropy loss.

- From probabilities to decisions.

Check out this Decision Boundary Visualizer, which allows you to visualize decision boundaries for different classifiers.

Question 🤔 (Answer at practicaldsc.org/q)

Remember that you can always ask questions anonymously at the link above!

Predicting probabilities 🎲¶

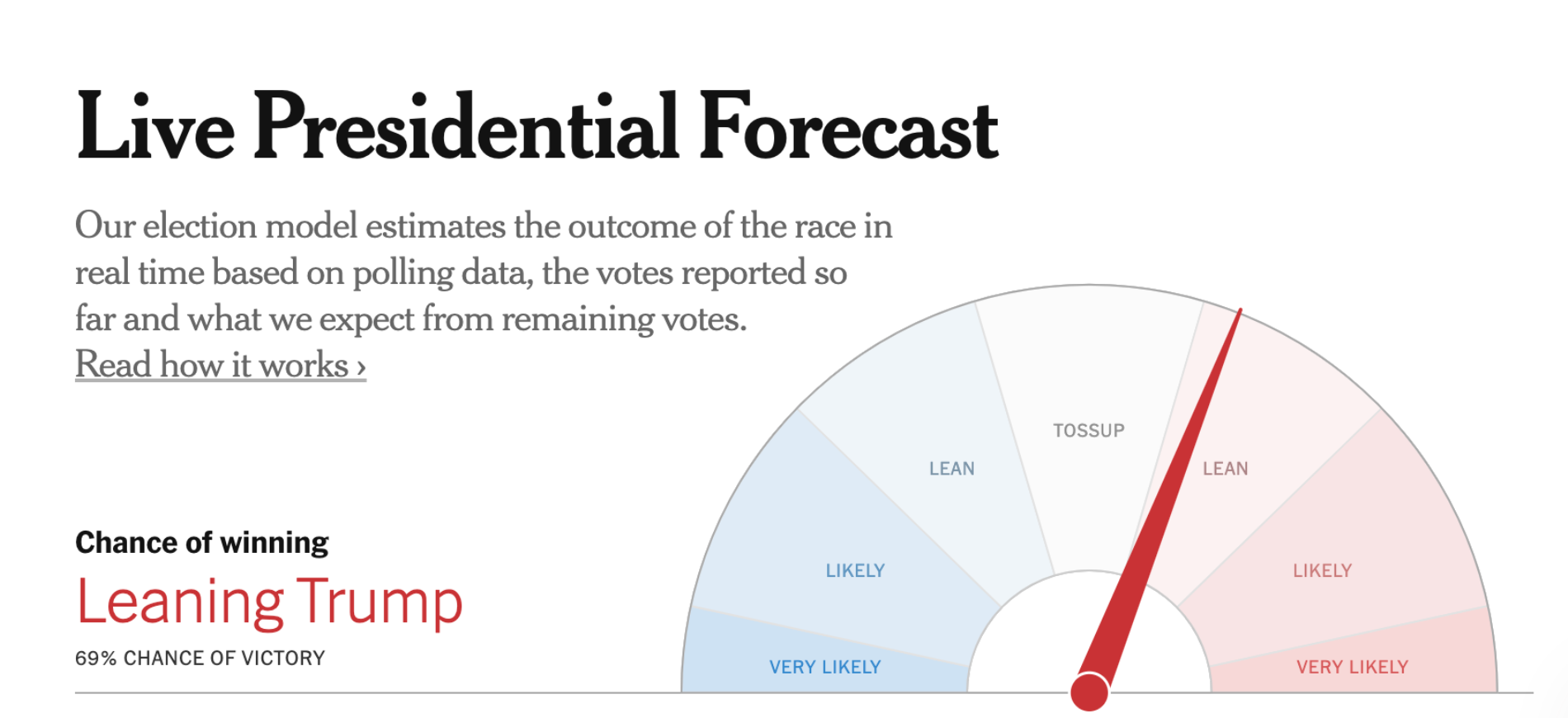

The New York Times maintained needles

that displayed the probabilities of various outcomes in the election.

Motivation: Predicting probabilities¶

- Often, we're interested in predicting the probability of an event occurring, given some other information.

what's the probability that Michigan wins?

In the context of weather apps, this is a nuanced question; here's a meme about it.

- If we're able to predict the probability of an event, we can classify the event by using a threshold.

For example, if we predict there's a 70% chance of Michigan winning, we could predict that Michigan will win. Here, we implicitly used a threshold of 50%.

- The two classification techniques we've seen so far – $k$-nearest neighbors and decision trees – don't directly use probabilities in their decision-making process.

But sometimes it's helpful to model uncertainty and to be able to state a level of confidence along with a prediction!

Recap: Predicting diabetes¶

- As before, class 0 (orange) is "no diabetes" and class 1 (blue) is "diabetes".

util.create_base_scatter(X_train, y_train)

- Let's try to predict whether or not a patient has diabetes (

'Outcome') given just their'Glucose'level.

Last class, we used both'Glucose'and'BMI'; we'll start with just one feature for now.

util.show_one_feature_plot(X_train, y_train)

- It seems that as a patient's

'Glucose'value increases, the chances they have diabetes also increases.

- Can we model this probability directly, as a function of

'Glucose'?

In other words, can we find some $f$ such that:

An attempt to predict probabilities¶

- Let's try and fit a simple linear model to the data from the previous slide.

util.show_one_feature_plot_with_linear_model(X_train, y_train)

- The simple linear model above predicts values greater than 1 and less than 0! This means we can't interpret the outputs as probabilities.

- We could, technically, clip the outputs of the linear model:

util.show_one_feature_plot_with_linear_model_clipped(X_train, y_train)

Bins and proportions¶

- Another approach we could try is to:

- Place

'Glucose'values into bins, e.g. 50 to 55, 55 to 60, 60 to 65, etc. - Within each bin, compute the proportion of patients in the training set who had diabetes.

- Place

# Take a look at the source code in lec22_util.py to see how we did this!

# We've hidden a lot of the plotting code in the notebook to make it cleaner.

util.make_prop_plot(X_train, y_train)

- For example, the point near a

'Glucose'value of 100 has a $y$-axis value of ~0.25. This means that about 25% of patients with a'Glucose'value near 100 had diabetes in the training set.

- So, if a new person comes along with a

'Glucose'value near 100, we'd predict there's a 25% chance they have diabetes (so they likely do not)!

- Notice that the points form an S-shaped curve!

Can we incorporate this S-shaped curve in how we predict probabilities?

The logistic function¶

The logistic function resembles an $S$-shape.

$$\sigma(t) = \frac{1}{1 + e^{-t}} = \frac{1}{1 + \text{exp}(-t)}$$

The logistic function is an example of a sigmoid function, which is the general term for an S-shaped function. Sometimes, we use the terms "logistic function" and "sigmoid function" interchangeably.

- Below, we'll look at the shape of $y = \sigma(w_0 + w_1 x)$ for different values of $w_0$ and $w_1$.

- $w_0$ controls the position of the curve on the $x$-axis.

- $w_1$ controls the "steepness" of the curve.

util.show_three_sigmoids()

- Notice that $0 < \sigma(t) < 1$, for all $t$, which means we can interpret the outputs of $\sigma(t)$ as probabilities!

Below, interact with the sliders to change the values of $w_0$ and $w_1$.

interact(util.plot_sigmoid, w0=(-15, 15), w1=(-3, 3, 0.1));

interactive(children=(IntSlider(value=0, description='w0', max=15, min=-15), FloatSlider(value=0.0, descriptio…

Logistic regression¶

- Logistic regression is a linear classification technique that builds upon linear regression.

It is not called logistical regression!

- It models the probability of belonging to class 1, given a feature vector:

- Note that the existence of coefficients, $w_0, w_1, ... w_d$, that we need to learn from the data, tells us that logistic regression is a parametric method!

LogisticRegression in sklearn¶

from sklearn.linear_model import LogisticRegression

- Let's fit a

LogisticRegressionclassifier. Specifically, this means we're askingsklearnto learn the optimal parameters $w_0^*$ and $w_1^*$ in:

model_logistic = LogisticRegression()

model_logistic.fit(X_train[['Glucose']], y_train)

LogisticRegression()In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

LogisticRegression()

- We get a test accuracy that's roughly in line with the test accuracies of the two models we saw last class.

model_logistic.score(X_test[['Glucose']], y_test)

0.75

- What does our fit model look like?

Visualizing a fit logistic regression model¶

- The values of $w_0^*$ and $w_1^*$

sklearnfound are below.

model_logistic.intercept_[0], model_logistic.coef_[0][0]

(-5.594502656264591, 0.039958336242438434)

- So, our fit model is:

util.show_one_feature_plot_with_logistic(X_train, y_train)

- So, if a patient has a

'Glucose'level of 150, the model's predicted probability that they have diabetes is:

model_logistic.predict_proba([[150]])

array([[0.4, 0.6]])

sklearn find $w_0^*$ and $w_1^*$?What loss function did it use?

Cross-entropy loss¶

The modeling recipe¶

- To train a parametric model, we always follow the same three steps.

$k$-Nearest Neighbors and decision trees didn't quite follow the same process.

- Choose a model.

- Choose a loss function.

- Minimize average loss to find optimal model parameters.

As we've now seen, average loss could also be regularized!

Attempting to use squared loss¶

- Our default loss function has always been squared loss, so we could try and use it here.

- Unfortunately, there's no closed form solution for $\vec{w}^*$, so we'll need to use gradient descent.

- Before doing so, let's visualize the loss surface in the case of our "simple" logistic model:

- Specifically, we'll visualize:

util.show_logistic_mse_surface(X_train, y_train)

- What do you notice?

Mean squared error doesn't work well with logistic regression!¶

- The following function is not convex:

- There are two flat "valleys" with gradients near 0, where gradient descent could get trapped.

Additionally, squared loss doesn't penalize bad predictions nearly enough. The largest possible value of:

$$\left( y_i - \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i) \right) \right)^2$$

is 1, since both $y_i$ and $\sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i) \right)$ are bounded between 0 and 1, and $(1 - 0)^2 = 1$.

- Suppose $y_i = 1$. Then, the graph of the squared loss of the prediction $p_i$ is below.

util.show_squared_loss_individual()

- Predicted $p_i$ values near 0 are really bad, since $y_i = 1$, but the loss for $p_i = 0$ is not very high.

- It seems like we need a loss function that more steeply penalizes incorrect probability predictions – and hopefully, one that is convex for the logistic regression model!

Cross-entropy loss¶

- A common loss function in this setting is log loss, i.e. cross-entropy loss.

The term "entropy" comes from information theory. Watch this short video for more details.

- We can define the cross-entropy loss function piecewise. If $y_i$ is an observed value and $p_i$ is a predicted probability, then:

- Note that in the two cases – $y_i = 1$ and $y_i = 0$ – the cross-entropy loss function resembles squared loss, but is unbounded when the predicted probabilities $p_i$ are far from $y_i$.

util.show_ce_loss_individual_1()

util.show_ce_loss_individual_0()

A non-piecewise definition of cross-entropy loss¶

- We can define the cross-entropy loss function piecewise. If $y_i$ is an observed value and $p_i$ is a predicted probability, then:

- An equivalent formulation of $L_\text{ce}$ that isn't piecewise is:

- This formulation is easier to work with algebraically!

Average cross-entropy loss¶

- Cross-entropy loss for an observed value $y_i$ and predicted probability $p_i = P(y_i = 1 | \vec{x}_i) = \sigma \left(\vec w \cdot \text{Aug}(\vec x_i) \right)$ is:

- To find $\vec{w}^*$, then, we minimize average cross-entropy loss:

- Cross-entropy loss is the default loss function used to find optimal parameters in logistic regression.

- There's still no closed-form solution for $\vec{w}^* = \underset{\vec{w}}{\text{argmin}} \: R_\text{ce}(\vec{w})$, so we'll need to use gradient descent, or some other numerical method.

But don't worry – we'll leave this tosklearn!

- Fortunately, average cross-entropy loss is convex, too.

util.show_logistic_ce_surface(X_train, y_train)

- And, it can be regularized!

By default,sklearnapplies regularization when performing logistic regression.

util.show_logistic_ce_surface(X_train, y_train, reg_lambda=0.5)

The modeling recipe, revisited¶

- Choose a model.

- Choose a loss function.

Minimize average loss to find optimal model parameters.

As we've now seen, average loss could also be regularized!\begin{align*}R_\text{ce}(\vec{w}) &= - \frac{1}{n} \sum_{i = 1}^n \left( y_i \log p_i + (1 - y_i) \log (1 - p_i) \right) \\ &= - \frac{1}{n} \sum_{i = 1}^n \left[ y_i \log \left( \sigma \left(\vec{w} \cdot \text{Aug}(\vec{x}_i) \right) \right) + (1 - y_i) \log \left(1 - \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i) \right)\right) \right]\end{align*}

The actual minimization here is done using numerical methods, through

sklearn.

LogisticRegression in sklearn, revisited¶

- The

LogisticRegressionclass insklearnhas a lot of hidden, default hyperparameters.

LogisticRegression?

- It performs $L_2$ regularization ("ridge logistic regression") by default. The hyperparameter for regularization strength, $C$, is the inverse of $\lambda$; by default, it sets $C = 1$.

- So, for a given value of $C$, it minimizes:

- It also specifies

solver='lbfgs', i.e. it doesn't use gradient descent per-se, but another more sophisticated numerical method.

Read more about LBFGS here.

By default, logistic regression in scikit-learn runs w L2 regularization on and defaulting to magic number C=1.0. How many millions of ML/stats/data-mining papers have been written by authors who didn't report (& honestly didn't think they were) using regularization?

— Zachary Lipton (@zacharylipton) August 30, 2019

Question 🤔 (Answer at practicaldsc.org/q)

What questions do you have?

From probabilities to decisions¶

Predicting probabilities vs. predicting classes¶

- 🤔 Question: Suppose our logistic regression model predicts the probability that someone has diabetes is 0.75. What do we predict – diabetes or no diabetes? What if the predicted probability is 0.3?

- 🙋 Answer: We have to pick a threshold (for example, 0.5)!

- If the predicted probability is above the threshold, we predict diabetes (1).

- Otherwise, we predict no diabetes (0).

Predicting probabilities vs. predicting classes¶

- By default, the

predictmethod of a fitLogisticRegressionmodel predicts a class.

model_logistic.predict(pd.DataFrame([{

'Glucose': 150,

}]))

array([1])

- But, logistic regression is designed to predict probabilities. We can access these predicted probabilities using the

predict_probamethod, as we saw earlier.

model_logistic.predict_proba(pd.DataFrame([{

'Glucose': 150,

}]))

array([[0.4, 0.6]])

- The above is telling us that the model thinks this person has:

- A 40% chance of belonging to class 0 (no diabetes).

- A 60% chance of belonging to class 1 (diabetes).

- By default, it uses a threshold of 0.5, i.e. it predicts the larger probability.

As we'll discuss next class, this may not be what we want!

Unfortunately,sklearndoesn't let us change the threshold ourselves. If we want a different threshold, we need to manually implement it using the results ofpredict_proba.

Thresholding probabilities¶

- As we did with other classifiers, we can visualize the decision boundary of a fit logistic regression model.

If we pick a threshold of $T$, any patient with a

'Glucose'value such that:$$\sigma(w_0^* + w_1^* \cdot \text{Glucose}_i) \geq T$$

is classified as having diabetes.

- For example, if $T = 0.5$:

util.show_one_feature_plot_with_logistic_and_y_threshold(X_train, y_train, 0.5)

- If we set $T = 0.5$, then patients with

'Glucose'values above $\approx$ 140 are classified as having diabetes.

util.show_one_feature_plot_with_logistic_and_x_threshold(X_train, y_train, 0.5)

- How do we find the exact $x$-axis position of the decision boundary above?

If we can, then we'd be able to predict whether someone has diabetes just by looking at their'Glucose'value.

Decision boundaries for logistic regression¶

- In our single feature model that predicts

'Outcome'given just'Glucose', our predicted probabilities are of the form:

- Suppose we fix a threshold, $T$. Then, our decision boundary is of the form:

- If we can invert $\sigma(t)$, then we can re-arrange the above to solve for the

'Glucose'value at the threshold:

- Important: If $p = \sigma(t)$, then $\sigma^{-1}({p}) = \log \left( \frac{p}{1-p} \right)$ is the inverse of $\sigma(t)$.

$\sigma^{-1}(p)$ is called the logit function.

Aside: Odds¶

- Suppose an event occurs with probability $p$.

- The odds of that event are:

For instance, if there's a $p = \frac{3}{4}$ chance that Michigan wins this week, then the odds that Michigan wins this week are:

$$\text{odds} \left( \frac{3}{4} \right) = \frac{\frac{3}{4}}{\frac{1}{4}} = 3$$

- Interpretation: it's 3 times more likely that Michigan wins than loses.

- We can interpret $\sigma^{-1}(p) = \log \left( \frac{p}{1-p} \right)$ as the "log odds" of $p$!

See the reference slides for more details.

Solving for the decision boundary¶

- Previously, we said that if we pick a threshold $T$, then:

- We re-arranged this for the

'Glucose'value on the threshold, $\text{Glucose}_T$:

- Using the fact that $\sigma^{-1}(T) = \log \left( \frac{T}{1 - T} \right)$ gives us a closed-form formula for $\text{Glucose}_T$!

- This explains why $\text{Glucose} \geq 140$ is the decision boundary below!

w0_star = model_logistic.intercept_[0]

w1_star = model_logistic.coef_[0][0]

T = 0.5

glucose_threshold = (np.log(T / (1 - T)) - w0_star) / w1_star

glucose_threshold

140.0083983057046

util.show_one_feature_plot_with_logistic_and_x_threshold(X_train, y_train, 0.5)

The decision boundary in the feature space¶

- The decision boundary on the previous slide is:

- Let's visualize this in the feature space. We are just using $d = 1$ feature, so let's visualize our decision boundary with a 1D plot, i.e. a number line.

util.show_one_feature_plot_in_1D(X_train, y_train, 0.5)

Logistic regression with multiple features¶

- Now, as we did last class, let's use both

'Glucose'and'BMI'to predict diabetes.

util.create_base_scatter(X_train, y_train)

- Specifically, our fit model will look like:

model_logistic_multiple = LogisticRegression()

model_logistic_multiple.fit(X_train, y_train)

LogisticRegression()In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

LogisticRegression()

- After minimizing mean (regularized!) cross-entropy loss, we find that our fit model is of the form:

model_logistic_multiple.intercept_, model_logistic_multiple.coef_

(array([-7.85]), array([[0.04, 0.08]]))

Visualizing a fit logistic regression model with two features¶

- Recall, the logistic regression model is trained to predict the probability of class 1 (diabetes).

- The graph below shows the predicted probabilities of class 1 (diabetes) for different combinations of features.

util.show_logistic(model_logistic_multiple, X_train, y_train)

The decision boundary in the feature space¶

- What does the resulting decision boundary look like, in a $d = 2$ dimensional plot?

util.show_decision_boundary(model_logistic_multiple, X_train, y_train, title='Logistic Regression Decision Boundary (T = 0.5)')

- Note that unlike the decision boundaries for $k$-nearest neighbors and decision trees, this decision boundary is linear.

- Specifically, the decision boundary in the feature space is of the form:

- In Homework 11 (released later this week), you'll solve for $a$, $b$, and $c$ in a similar example!

It involves retracing the steps we followed in the single-feature case.

Question 🤔 (Answer at practicaldsc.org/q)

What questions do you have?

- The logistic function, $\sigma(t)$, obeys several interesting properties.

- It is symmetric.

- Its derivative is conveniently calculated:

- But, most relevant to us right now, its inverse is:

- Let $p$ represent our predicted probability.

- Using the inverse of the logistic function, we have that:

- On the left, we have a linear function of $\text{Glucose}_i$.

- On the right, we have the log of the odds of $p$.

We call the "log of the odds" the "log odds".

- Important: The logistic regression model assumes that the log of the odds of $P(y_i = 1 | \vec{x}_i)$ is linear!

- Suppose that $w_0^* = -6$ and $w_1^* = 0.05$. Then:

- It's hard to interpret the role of the coefficient $0.05$ directly. But, we know that:

- Example: Suppose my

'Glucose'level increases by 1 unit. Then, the predicted log odds that I have diabetes increases by 0.05.

- But, since:

- And:

- We can say that if my

'Glucose'level increases by 1 unit, then my predicted odds of diabetes increases by a factor of $e^{0.05}$, or more generally $e^{w_1^*}$.

- You'll need this interpretation in Homework 11!

Question 🤔 (Answer at practicaldsc.org/q)

Which expression describes the odds ratio, $$\frac{P(y_i = 1 | \vec{x}_i)}{P(y_i = 0 | \vec{x}_i)}$$

in the logistic regression model?

- A. $\vec{w} \cdot \text{Aug}(\vec{x}_i)$

- B. $-\vec{w} \cdot \text{Aug}(\vec{x}_i)$

- C. $e^{\vec{w} \cdot \text{Aug}(\vec{x}_i)}$

- D. $\sigma(\vec{w} \cdot \text{Aug}(\vec{x}_i))$

- E. None of the above.