# Run this cell to get everything set up.

from lec_utils import *

import lec25_util as util

diabetes = pd.read_csv('data/diabetes.csv')

from sklearn.model_selection import train_test_split

diabetes = diabetes[(diabetes['Glucose'] > 0) & (diabetes['BMI'] > 0)]

X_train, X_test, y_train, y_test = (
    train_test_split(diabetes[['Glucose', 'BMI']], diabetes['Outcome'], random_state=11)
)

from ipywidgets import interact

import warnings

warnings.simplefilter('ignore')

Announcements 📣

Homework 10 is out, and is due on Monday, December 2nd.

Homework 11 will be out soon, and will be due on Thursday, December 5th.

It'll be fully autograded, with no hidden tests, and have just three questions.

The Portfolio Homework is due on Saturday, December 7th – no slip days allowed!

We'll release checkpoint grades today; thanks for getting those in on time.

Consider entering the Big Ten Data Viz Championship. Submissions are due on January 15th. Read more here.

Help Michigan defend its title!

Some suggested courses for next semester can be found in #306 on Ed.

And please help spread the word about 398!

Agenda

- Logistic regression.

- Recap.

- Choosing a threshold.

- Linear separability.

- Multiclass classification.

Question 🤔 (Answer at practicaldsc.org/q)

Remember that you can always ask questions anonymously at the link above!

Recap: Logistic regression

Logistic regression

- Logistic regression is a linear classification technique that builds upon linear regression.

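- It models the probability of belonging to class 1, given a feature vector:

$$P(y_i = 1 | \vec{x}_i) = \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i)\right), \qquad \sigma(t) = \frac{1}{1 + e^{-t}}$$

- Suppose we train a logistic regression model to predict the probability a patient has diabetes ($y = 1$) given their `'Glucose'` and `'BMI'`. The optimal parameters we found were $\vec{w}^* = \begin{bmatrix} -8.1697 & 0.0394 & 0.0802 \end{bmatrix}^T$. To predict probabilities, then, we use:

$$P(y_i = 1 | \vec{x}_i) = \sigma\left(-8.1697 + 0.0394 \cdot \text{Glucose}_i + 0.0802 \cdot \text{BMI}_i\right)$$

- For instance, if someone has a `'Glucose'` level of 150 and a `'BMI'` of 25, their predicted probability of diabetes is 43.67%:

$$\sigma\left(-8.1697 + 0.0394 \cdot 150 + 0.0802 \cdot 25\right) = \sigma(-0.2547) \approx 0.4367$$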

sigma = lambda t: 1 / (1 + np.e ** (-t))

sigma(-0.2547)

0.4366670090148261
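The same number can be computed directly from the parameter vector with a dot product. Below is a minimal NumPy sketch, assuming the $\vec{w}^*$ values quoted above; `augment` is a hypothetical helper name for $\text{Aug}(\vec{x})$, which prepends a 1 so that the first weight acts as the intercept.

```python
import numpy as np

def sigma(t):
    """Logistic (sigmoid) function: 1 / (1 + e^(-t))."""
    return 1 / (1 + np.exp(-t))

def augment(x):
    """Hypothetical helper for Aug(x): prepend a 1 so the first weight acts as the intercept."""
    return np.concatenate([[1.0], np.asarray(x, dtype=float)])

# Optimal parameters quoted above: [intercept, Glucose coefficient, BMI coefficient].
w_star = np.array([-8.1697, 0.0394, 0.0802])

x_new = np.array([150, 25])    # Glucose = 150, BMI = 25
z = w_star @ augment(x_new)    # w* · Aug(x)
p = sigma(z)                   # predicted P(y = 1 | x)
print(round(z, 4), round(p, 4))
```

Here `z` works out to $-0.2547$ and `p` to roughly 0.4367, matching the value above.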

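- To find the optimal parameters $\vec{w}^*$, we minimize mean cross-entropy loss:

$$R(\vec{w}) = -\frac{1}{n} \sum_{i=1}^n \left[ y_i \log \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i)\right) + (1 - y_i) \log \left( 1 - \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i)\right) \right) \right]$$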

There's no closed-form solution for $\vec{w}^*$, so we use some numerical method (or, rather, sklearn does).
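To make "some numerical method" concrete, here is a minimal batch gradient descent sketch on a tiny made-up dataset. It relies on the standard result that the gradient of mean cross-entropy loss is $\frac{1}{n} \sum_{i=1}^n \left( \sigma(\vec{w} \cdot \text{Aug}(\vec{x}_i)) - y_i \right) \text{Aug}(\vec{x}_i)$. This is only an illustration of the idea, not what sklearn does internally: `LogisticRegression` uses the `lbfgs` solver by default and also adds L2 regularization. The learning rate and iteration count are arbitrary choices.

```python
import numpy as np

def sigma(t):
    return 1 / (1 + np.exp(-t))

def mean_cross_entropy(w, X_aug, y):
    """Mean cross-entropy loss of weights w on augmented features X_aug and labels y."""
    p = sigma(X_aug @ w)
    eps = 1e-12  # guards against log(0)
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

def fit_gradient_descent(X, y, alpha=0.1, iterations=5000):
    """Minimize mean cross-entropy loss with plain batch gradient descent."""
    X_aug = np.column_stack([np.ones(len(X)), X])   # Aug(x_i) for every row
    w = np.zeros(X_aug.shape[1])
    for _ in range(iterations):
        p = sigma(X_aug @ w)
        gradient = X_aug.T @ (p - y) / len(y)
        w = w - alpha * gradient
    return w, X_aug

# Tiny, made-up dataset with one feature; it is NOT linearly separable (x = 3 is class 1, x = 4 is class 0).
X = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([0, 0, 1, 0, 1, 1])

w, X_aug = fit_gradient_descent(X, y)
print(w, mean_cross_entropy(w, X_aug, y))
```

Starting from $\vec{w} = \vec{0}$, the loss begins at $\log 2 \approx 0.693$ and decreases from there.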

LogisticRegression in sklearn

- To illustrate, let's re-fit a model to predict diabetes from `'Glucose'` and `'BMI'` in `sklearn`.

from sklearn.linear_model import LogisticRegression

model_logistic_multiple = LogisticRegression()

model_logistic_multiple.fit(X_train, y_train)


LogisticRegression()

- By default, the `predict` method of a fit `LogisticRegression` model predicts a class; it applies a threshold $T = 0.5$ to the predicted probability.

model_logistic_multiple.predict(pd.DataFrame([{
    'Glucose': 150,
    'BMI': 25,
}]))

array([0])

- We can access the predicted probabilities using the `predict_proba` method.

model_logistic_multiple.predict_proba(pd.DataFrame([{
    'Glucose': 150,
    'BMI': 25,
}]))

array([[0.56, 0.44]])

The decision boundary in the feature space

- After choosing $T = 0.5$, what does the resulting decision boundary look like, in a $d = 2$ dimensional plot?

util.show_decision_boundary(model_logistic_multiple, X_train, y_train, title='Decision Boundary when Using Both Glucose and BMI \n and T = 0.5 (the default)')

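- Note that unlike the decision boundaries for $k$-Nearest Neighbors and decision trees, this decision boundary is linear. Specifically, it is the line:

$$\sigma\left(-8.1697 + 0.0394 \cdot \text{Glucose} + 0.0802 \cdot \text{BMI}\right) = 0.5$$

- Important: Since $\sigma(0) = 0.5$, we can write the above as:

$$-8.1697 + 0.0394 \cdot \text{Glucose} + 0.0802 \cdot \text{BMI} = 0$$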
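Solving $-8.1697 + 0.0394 \cdot \text{Glucose} + 0.0802 \cdot \text{BMI} = 0$ for BMI gives the boundary as an explicit line, $\text{BMI} = \frac{8.1697 - 0.0394 \cdot \text{Glucose}}{0.0802}$. A small sketch, assuming the $\vec{w}^*$ values quoted earlier (for the fitted sklearn model, the analogous numbers would come from its `intercept_` and `coef_` attributes, which may differ slightly):

```python
import numpy as np

# [intercept, Glucose coefficient, BMI coefficient], as quoted earlier.
w0, w_glucose, w_bmi = -8.1697, 0.0394, 0.0802

def boundary_bmi(glucose):
    """BMI on the decision boundary w0 + w_glucose * Glucose + w_bmi * BMI = 0."""
    return -(w0 + w_glucose * glucose) / w_bmi

for glucose in [100, 150, 200]:
    bmi = boundary_bmi(glucose)
    logit = w0 + w_glucose * glucose + w_bmi * bmi   # (numerically) 0 on the boundary
    prob = 1 / (1 + np.exp(-logit))                  # so the predicted probability is 0.5
    print(glucose, round(bmi, 2), round(prob, 3))
```

Because the BMI coefficient is positive, points above this line (a higher BMI for a given Glucose) have $\vec{w}^* \cdot \text{Aug}(\vec{x}) > 0$ and are classified as class 1.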

Question 🤔 (Answer at practicaldsc.org/q)

Which expression describes the odds, $$\frac{P(y = 1 | \vec{x})}{P(y = 0 | \vec{x})}$$

in the logistic regression model?

- A. $\vec{w} \cdot \text{Aug}(\vec{x})$

- B. $-\vec{w} \cdot \text{Aug}(\vec{x})$

- C. $e^{\vec{w} \cdot \text{Aug}(\vec{x})}$

- D. $\sigma(\vec{w} \cdot \text{Aug}(\vec{x}))$

- E. None of the above.

Question 🤔 (Answer at practicaldsc.org/q)

Which expression describes $P(y = \mathbf{0} | \vec{x})$ in the logistic regression model?

- A. $\sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}) \right)$

- B. $-\sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}) \right)$

- C. $\sigma\left(- \vec{w} \cdot \text{Aug}(\vec{x}) \right)$

- D. $1 - \log \left( 1 + e^{\vec{w} \cdot \text{Aug}(\vec{x})} \right)$

- E. $1 + \log \left( 1 + e^{- \vec{w} \cdot \text{Aug}(\vec{x})} \right)$

Choosing a threshold

Thresholding

- As we've seen, in order to classify $\vec{x}$ as either yes ($y = 1$) or no ($y = 0$), we apply a threshold $T$ to the predicted probability. For example, with any threshold $T > 0.55$, a predicted probability of 0.55 is classified as no diabetes (class 0).

More generally, if we pick a threshold of $T$, then any feature vector $\vec{x}$ such that:

$$\sigma(\vec{w}^* \cdot \text{Aug}(\vec{x})) \geq T$$

is classified as class 1.

- Question: How do we choose the "right" threshold?

sklearn's default threshold of $T = 0.5$ is not guaranteed to yield the highest accuracy!

Remember, to find $\vec{w}^*$, we minimized mean cross-entropy loss (that is, we didn't "maximize" accuracy), and mean cross-entropy loss doesn't involve our threshold.
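Whatever threshold we pick, the decision boundary stays linear. Because $\sigma$ is strictly increasing, $\sigma(z) \geq T$ holds exactly when $z \geq \log \frac{T}{1 - T}$ (for $0 < T < 1$), so the boundary for threshold $T$ is the line $\vec{w}^* \cdot \text{Aug}(\vec{x}) = \log \frac{T}{1 - T}$; changing $T$ only shifts it. A quick numerical check of this equivalence (the grid of $z$ values and the thresholds are arbitrary choices):

```python
import numpy as np

def sigma(t):
    return 1 / (1 + np.exp(-t))

def logit(T):
    """Inverse of sigma: the value of z where sigma(z) = T, for 0 < T < 1."""
    return np.log(T / (1 - T))

z = np.linspace(-6, 6, 1001)          # a grid of values of w* · Aug(x)
for T in [0.2, 0.4, 0.5, 0.6, 0.8]:
    by_probability = sigma(z) >= T    # threshold the predicted probability
    by_logit = z >= logit(T)          # threshold w* · Aug(x) directly
    print(T, round(logit(T), 3), np.array_equal(by_probability, by_logit))
```

For $T = 0.5$, $\log \frac{T}{1 - T} = 0$, which recovers the boundary $\vec{w}^* \cdot \text{Aug}(\vec{x}) = 0$ from earlier.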

Choosing a custom threshold

- If we want to use a custom threshold, we'll need to implement the logic ourselves.

def predict_thresholded(X, T):
    '''Calls model_logistic_multiple.predict_proba.
    For each P(y = 1 | x), returns 1 if >= T and 0 if < T.'''
    probs = model_logistic_multiple.predict_proba(X)[:, 1]
    return (probs >= T).astype(int)

- Now, we can choose any threshold we'd like, and compute the accuracy of the resulting predictions.

predict_thresholded([[150, 25]], 0.5)

array([0])

predict_thresholded([[150, 25]], 0.4)

array([1])

predict_thresholded(X_train, 0.4)

array([1, 1, 1, ..., 0, 0, 0])

# Training accuracy for the threshold T = 0.4.

(predict_thresholded(X_train, 0.4) == y_train).mean()

0.7659574468085106

Accuracy vs. threshold

- Accuracy is defined as:

$$\text{accuracy} = \frac{\text{# points classified correctly}}{\text{# points}}$$

- How does the model's training accuracy change as the threshold changes?

Note that we'd see a similar trend with test accuracy, too.

util.plot_vs_threshold(X_train, y_train, 'Accuracy')

- The threshold with the best training accuracy (among the thresholds we tried) is $T = 0.485$, which has a training accuracy of 77.8%.

- Remember that 65% of people in the dataset don't have diabetes, so we can achieve a 65% training accuracy just by always predicting "no diabetes"! This means that a good model's accuracy should be much higher than 65%.

pd.Series(y_train).value_counts(normalize=True)

Outcome
0    0.65
1    0.35
Name: proportion, dtype: float64
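`util.plot_vs_threshold` is a course helper whose source isn't shown here. A plausible minimal version of the accuracy computation behind such a plot sweeps a grid of thresholds over an array of predicted probabilities $P(y = 1 | \vec{x})$ (in the lecture, these would come from something like `model_logistic_multiple.predict_proba(X_train)[:, 1]`). The probabilities and labels below are made up, and `accuracy_vs_threshold` is a hypothetical helper:

```python
import numpy as np

def accuracy_vs_threshold(probs, y, thresholds):
    """Accuracy of the rule 'predict 1 if probs >= T' for each threshold T."""
    return np.array([((probs >= T).astype(int) == y).mean() for T in thresholds])

# Made-up predicted probabilities and true labels, for illustration only.
probs = np.array([0.07, 0.22, 0.38, 0.47, 0.53, 0.63, 0.81, 0.93])
y     = np.array([0,    0,    1,    0,    0,    1,    1,    1   ])

thresholds = np.arange(0, 1.01, 0.05)
accs = accuracy_vs_threshold(probs, y, thresholds)
best = thresholds[np.argmax(accs)]   # first threshold achieving the highest accuracy
print(round(best, 2), accs.max())
```

On this toy data, the best grid threshold is 0.55, not 0.5, echoing the point that the default $T = 0.5$ isn't guaranteed to maximize accuracy.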

Metrics for binary classification

- A few lectures ago, we introduced other metrics for measuring the quality of a binary classifier's predictions.

- A binary classifier's confusion matrix displays its number of true positives ($TP$), false positives ($FP$), true negatives ($TN$), and false negatives ($FN$).

util.show_confusion(X_train, y_train, T=0.5)

- Remember, we're predicting whether or not patients have diabetes. Which is worse: a false positive or a false negative?

- Observe how the values in the confusion matrix change as the threshold changes!

interact(lambda T: util.show_confusion(X_train, y_train, T), T=(0, 1, 0.01));
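The four counts in the confusion matrix can be computed directly from thresholded predictions. A sketch with made-up labels and probabilities (`confusion_counts` is a hypothetical helper); `sklearn.metrics.confusion_matrix` returns the same counts, laid out as `[[TN, FP], [FN, TP]]` when the labels are 0 and 1:

```python
import numpy as np
from sklearn.metrics import confusion_matrix

def confusion_counts(y, y_pred):
    """Return (TP, FP, TN, FN) for binary labels in {0, 1}."""
    tp = int(np.sum((y_pred == 1) & (y == 1)))
    fp = int(np.sum((y_pred == 1) & (y == 0)))
    tn = int(np.sum((y_pred == 0) & (y == 0)))
    fn = int(np.sum((y_pred == 0) & (y == 1)))
    return tp, fp, tn, fn

# Made-up labels and predicted probabilities.
y     = np.array([0, 0, 1, 0, 0, 1, 1, 1])
probs = np.array([0.07, 0.22, 0.38, 0.47, 0.53, 0.63, 0.81, 0.93])

for T in [0.3, 0.5, 0.7]:
    y_pred = (probs >= T).astype(int)
    tp, fp, tn, fn = confusion_counts(y, y_pred)
    print(T, dict(TP=tp, FP=fp, TN=tn, FN=fn))
    # sklearn's layout: rows are actual classes (0, 1), columns are predicted classes (0, 1).
    assert (confusion_matrix(y, y_pred) == np.array([[tn, fp], [fn, tp]])).all()
```

As $T$ increases, predictions move from the "predicted positive" column to the "predicted negative" column, trading false positives for false negatives.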

Precision vs. threshold

Precision is defined as:

$$\text{precision} = \frac{TP}{\text{# predicted positive}} = \frac{TP}{TP + FP}$$

Here, a false positive ($FP$) is when we predict that someone has diabetes when they do not.

- How does the model's training precision change as the threshold changes?

util.plot_vs_threshold(X_train, y_train, 'Precision')

- If the "bar" is higher to predict 1, then we will have fewer positives in general, and thus fewer false positives.

- As the threshold increases ⬆️, the denominator in $\text{precision} = \frac{TP}{TP + FP}$ will decrease, and so precision tends to increase ⬆️.

There are some cases where a slightly higher threshold led to a slightly lower precision; why?

Recall vs. threshold

Recall is defined as:

$$\text{recall} = \frac{TP}{\text{# actually positive}} = \frac{TP}{TP + FN}$$

Here, a false negative ($FN$) is when we predict that someone does not have diabetes, when they really do.

- How does the model's training recall change as the threshold changes?

util.plot_vs_threshold(X_train, y_train, 'Recall')

- Note that the denominator in $\text{recall} = \frac{TP}{\text{# actually positive}}$ is constant. As the threshold increases ⬆️:

- true positives get converted to false negatives, so

- the numerator of recall ($TP$) decreases, and so

- recall decreases ⬇️.
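Both trends can be checked by computing precision and recall over a handful of thresholds. A sketch with made-up data (the `precision_recall` helper is hypothetical, not part of the course's `util` module). Since recall's denominator is fixed, recall never increases as $T$ grows, while precision usually, but not always, increases:

```python
import numpy as np

def precision_recall(y, probs, T):
    """Precision and recall of the rule 'predict 1 if probs >= T'."""
    y_pred = (probs >= T).astype(int)
    tp = np.sum((y_pred == 1) & (y == 1))
    fp = np.sum((y_pred == 1) & (y == 0))
    fn = np.sum((y_pred == 0) & (y == 1))
    # Precision is undefined when nothing is predicted positive.
    precision = tp / (tp + fp) if tp + fp > 0 else np.nan
    recall = tp / (tp + fn)   # tp + fn = number of actual positives, which doesn't depend on T
    return precision, recall

# Made-up labels and predicted probabilities.
y     = np.array([0, 0, 1, 0, 0, 1, 1, 1])
probs = np.array([0.07, 0.22, 0.38, 0.47, 0.53, 0.63, 0.81, 0.93])

thresholds = [0.1, 0.3, 0.4, 0.5, 0.7, 0.9]
results = [precision_recall(y, probs, T) for T in thresholds]
for T, (p, r) in zip(thresholds, results):
    print(f'T = {T}: precision = {p:.3f}, recall = {r:.3f}')
```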

Precision vs. recall

- We can visualize how precision and recall vary together.

util.pr_curve(X_train, y_train)

- The curve above is called a PR curve.

- Question: Given the information above, what threshold would you choose?

- Answer: The threshold whose point is closest to the top right corner of the plot above.

Why? The top right corner is where precision = 1 and recall = 1, and we want both to be high.
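One way to turn "closest to the top right corner" into a rule is to minimize the Euclidean distance from each (recall, precision) point to $(1, 1)$. A sketch with made-up data; `closest_to_top_right` is a hypothetical helper, and this is one reasonable criterion among several, not the only one:

```python
import numpy as np

def closest_to_top_right(y, probs, thresholds):
    """Return the threshold whose (recall, precision) point is closest to (1, 1), and that distance."""
    best_T, best_dist = None, np.inf
    for T in thresholds:
        y_pred = (probs >= T).astype(int)
        tp = np.sum((y_pred == 1) & (y == 1))
        fp = np.sum((y_pred == 1) & (y == 0))
        fn = np.sum((y_pred == 0) & (y == 1))
        if tp + fp == 0:          # precision is undefined; skip this threshold
            continue
        precision, recall = tp / (tp + fp), tp / (tp + fn)
        dist = np.hypot(1 - recall, 1 - precision)   # distance to the corner (1, 1)
        if dist < best_dist:
            best_T, best_dist = T, dist
    return best_T, best_dist

# Made-up labels and predicted probabilities.
y     = np.array([0, 0, 1, 0, 0, 1, 1, 1])
probs = np.array([0.07, 0.22, 0.38, 0.47, 0.53, 0.63, 0.81, 0.93])

best_T, best_dist = closest_to_top_right(y, probs, thresholds=np.linspace(0.05, 0.95, 19))
print(round(best_T, 2), best_dist)
```

Weighting precision and recall equally like this is a design choice; if false negatives are much costlier than false positives (as they may be when screening for diabetes), favoring higher recall may make more sense.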

ROC curves

- A more popular variant of the PR curve is the ROC curve.

ROC stands for "receiver operating characteristic."

See here for a good discussion on the differences between PR curves and ROC curves.

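- A ROC curve plots true positive rate (TPR) vs. false positive rate (FPR) for all possible thresholds, where:

$$TPR = \frac{TP}{\text{# actually positive}} = \frac{TP}{TP + FN} \qquad FPR = \frac{FP}{\text{# actually negative}} = \frac{FP}{FP + TN}$$

Note that $TPR$ is the same as recall.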

- The ROC curve for our classifier looks like:

util.draw_roc_curve(X_train, y_train)

- If we care about TPR and FPR equally, the best threshold is the one whose point is closest to the top left corner in the plot above.

Why? The top left corner is where $TPR = 1$ and $FPR = 0$, and we want $TPR$ to be high and $FPR$ to be low.

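- A common metric for the quality of a binary classifier is the area under the ROC curve (AUC).

The AUC is between 0 and 1, and larger values are better: an AUC of 1 means the classifier ranks every positive above every negative, while random guessing gives an AUC of about 0.5.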
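sklearn can compute the ROC curve and its AUC directly from predicted probabilities, via `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score`. A sketch with made-up data, which also picks the threshold whose point is closest to the top left corner (one reasonable rule when TPR and FPR matter equally):

```python
import numpy as np
from sklearn.metrics import roc_curve, roc_auc_score

# Made-up labels and predicted probabilities.
y     = np.array([0, 0, 1, 0, 0, 1, 1, 1])
probs = np.array([0.07, 0.22, 0.38, 0.47, 0.53, 0.63, 0.81, 0.93])

# roc_curve returns one (FPR, TPR) point per candidate threshold.
fpr, tpr, thresholds = roc_curve(y, probs)
auc = roc_auc_score(y, probs)

# Index of the point closest to the top left corner (FPR = 0, TPR = 1).
best = np.argmin(np.hypot(fpr, 1 - tpr))
print(thresholds[best], fpr[best], tpr[best], auc)
```

Here the AUC is 0.875: of the 16 (positive, negative) pairs, the positive point gets the higher probability in 14.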

Question 🤔 (Answer at practicaldsc.org/q)

What questions do you have about thresholds and logistic regression?

Linear separability

Feature space

- Suppose we're using $d$ features as inputs to our classifier. Consider a visualization of the features in $d$-dimensional space.

- Example: $d = 1$.

util.show_one_feature_plot_in_1D(X_train, y_train, thres=False)

- Example: $d = 2$.

util.make_two_feature_scatter(X_train, y_train)

- Note that in both plots above, there are orange points mixed in with the blue points!

Linear separability

- A dataset is linearly separable if a line, plane, or hyperplane can be drawn in $d$-dimensional space that perfectly separates the two classes.

- Example: $d = 1$.

util.lin_sep_1D()

util.non_lin_sep_1D()

- Example: $d = 2$.

util.lin_sep_2D()

util.non_lin_sep_2D()

- Why is the dataset below not linearly separable?

util.bad_example_1D()
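In $d = 1$, linear separability is easy to check: a single cutoff $c$ separates the classes exactly when every point of one class lies strictly below every point of the other. A minimal sketch; the helper name `separating_interval_1d` and the data are made up for illustration:

```python
import numpy as np

def separating_interval_1d(x, y):
    """For one feature x and binary labels y in {0, 1}, return an open interval (lo, hi)
    such that any cutoff c in it puts all of one class below c and all of the other above c.
    Returns None if the data are not linearly separable."""
    x0, x1 = x[y == 0], x[y == 1]
    if x0.max() < x1.min():      # every class-0 point is below every class-1 point
        return (x0.max(), x1.min())
    if x1.max() < x0.min():      # every class-1 point is below every class-0 point
        return (x1.max(), x0.min())
    return None

glucose = np.array([90, 105, 120, 150, 160, 185])
labels = np.array([0, 0, 0, 1, 1, 1])
print(separating_interval_1d(glucose, labels))          # separable

labels_mixed = np.array([0, 1, 0, 1, 0, 1])
print(separating_interval_1d(glucose, labels_mixed))    # not separable
```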

Linear separability and decision boundaries

- By definition, if a dataset is linearly separable, then there exists a linear decision boundary that achieves 100% training accuracy.

util.lin_sep_1D()

- Above, any value of $c$ in $(120, 150)$ would make the decision boundary $$\text{Glucose} = c$$

achieve 100% training accuracy.

- Question: How do we find this decision boundary?

Logistic regression and linear separability

- Logistic regression, without regularization, fails to converge on linearly separable data!

- Let's re-draw the plot below, but with diabetes status drawn on the $y$-axis.

util.lin_sep_1D()

- Why would the optimal $w_1^*$ below tend to $\infty$?

See the annotated slides for more details.

util.lin_sep_1D_elevated()
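A numerical illustration of why: on linearly separable 1D data, fix any separating cutoff $c$ and set $w_0 = -sc$, $w_1 = s$. The decision boundary stays at $x = c$, every point stays on the correct side, and increasing $s$ pushes every predicted probability closer to its true label, so mean cross-entropy loss keeps shrinking toward 0 without ever reaching it. The data and cutoff below are made up:

```python
import numpy as np

def mean_cross_entropy(w0, w1, x, y):
    """Mean cross-entropy loss when P(y = 1 | x) = sigma(w0 + w1 * x)."""
    z = w0 + w1 * x
    # -log(sigma(z)) = log(1 + e^(-z)) and -log(1 - sigma(z)) = log(1 + e^z), computed stably.
    return np.mean(y * np.logaddexp(0, -z) + (1 - y) * np.logaddexp(0, z))

# Made-up, linearly separable data: every class-0 point is below every class-1 point.
x = np.array([1.0, 2.0, 3.0, 5.0, 6.0, 7.0])
y = np.array([0, 0, 0, 1, 1, 1])
c = 4.0  # any cutoff strictly between 3 and 5 separates the classes

scales = [0.1, 1, 10, 100]
# w0 = -s * c and w1 = s: the boundary stays at x = c while the slope grows.
losses = [mean_cross_entropy(-s * c, s, x, y) for s in scales]
for s, loss in zip(scales, losses):
    print(f'w1 = {s}: mean cross-entropy loss = {loss:.3g}')
```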

- To prevent this, logistic regression should always be regularized.
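In sklearn, `LogisticRegression` already applies L2 regularization by default (`penalty='l2'`, with strength controlled by `C=1.0`; smaller `C` means stronger regularization). A sketch on made-up separable data, showing that the fitted slope stays finite but grows as regularization is weakened:

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Made-up, linearly separable data with one feature: class 0 below 4, class 1 above 4.
X = np.array([[1.0], [2.0], [3.0], [5.0], [6.0], [7.0]])
y = np.array([0, 0, 0, 1, 1, 1])

coefs = {}
for C in [0.01, 1, 100]:
    # max_iter is raised because weak regularization on separable data
    # can take many iterations to converge.
    model = LogisticRegression(C=C, max_iter=10_000).fit(X, y)
    coefs[C] = model.coef_[0, 0]
    print(f'C = {C}: fitted w1 = {coefs[C]:.4f}')
```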

Multiclass classification

From binary to multiclass classification

- In binary classification, there are only two possible classes, typically either 0 or 1.

- In multiclass classification, there can be any finite number of classes, or labels. They need not be numbers, either.

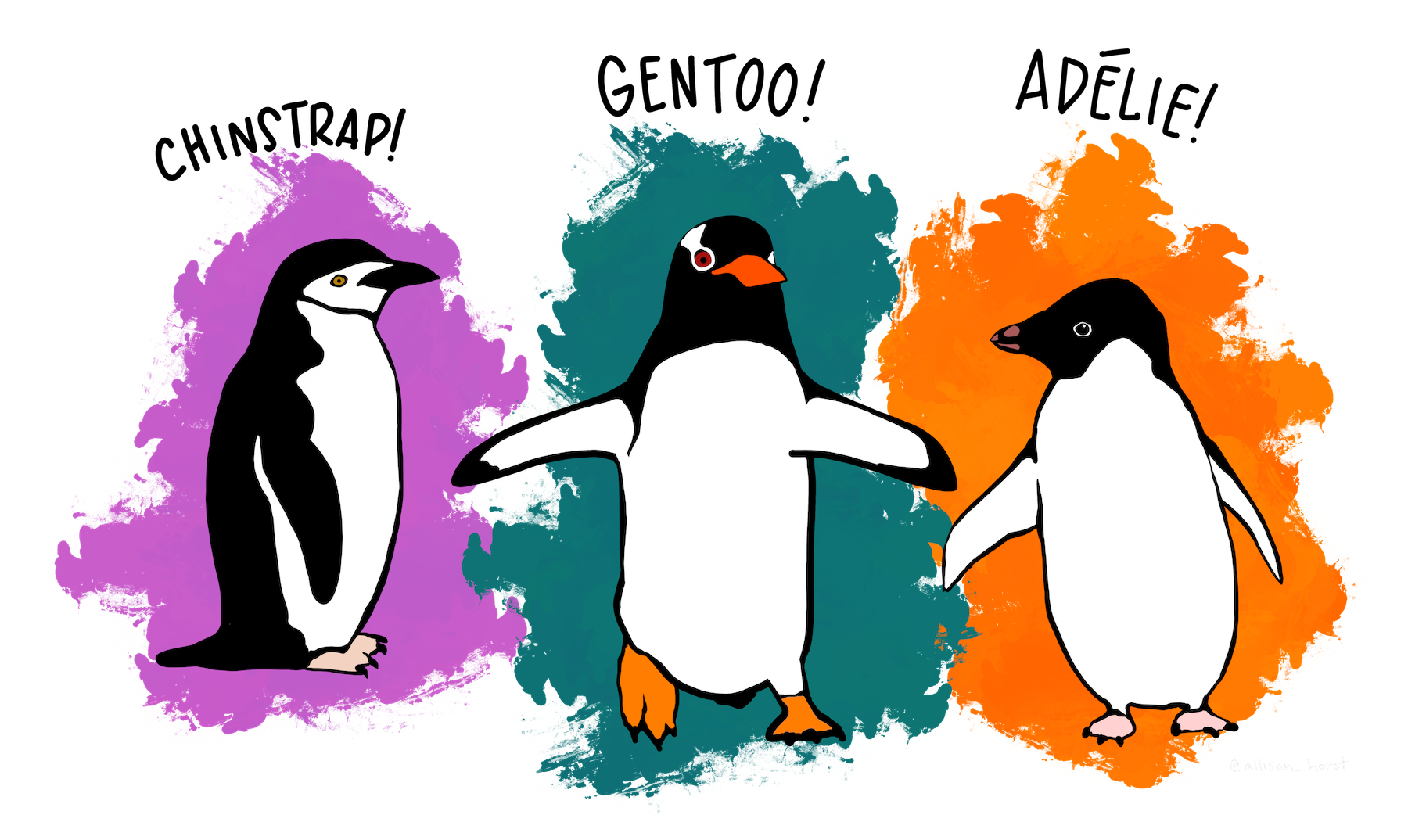

Return of the penguins!

Artwork by @allison_horst


To illustrate multiclass classification, we'll revisit the Palmer Penguins dataset we saw earlier in the semester.

Loading the data

penguins = sns.load_dataset('penguins').dropna().reset_index(drop=True)

penguins

|   | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |
|---|---|---|---|---|---|---|---|
| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |
| 1 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | Female |
| 2 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | Female |
| ... | ... | ... | ... | ... | ... | ... | ... |
| 330 | Gentoo | Biscoe | 50.4 | 15.7 | 222.0 | 5750.0 | Male |
| 331 | Gentoo | Biscoe | 45.2 | 14.8 | 212.0 | 5200.0 | Female |
| 332 | Gentoo | Biscoe | 49.9 | 16.1 | 213.0 | 5400.0 | Male |

333 rows × 7 columns

- Here, each row corresponds to a single penguin.

- There are three `'species'` of penguin: Adelie, Chinstrap, and Gentoo.

penguins['species'].value_counts(normalize=True)

species
Adelie       0.44
Gentoo       0.36
Chinstrap    0.20
Name: proportion, dtype: float64

- Question: What accuracy would the best "constant" classifier achieve on this data?

Visualizing the data

- Visually, it seems that penguins of different `'species'` are mostly separated based on their physical characteristics (`'bill_depth_mm'`, `'bill_length_mm'`, and `'body_mass_g'`).

util.penguin_scatter_3d(penguins)

- For simplicity, we'll work with just two features: `'bill_length_mm'` and `'body_mass_g'`.

fig = util.penguin_scatter_2d(penguins)

fig

- But first, a train-test split.

X_train, X_test, y_train, y_test = train_test_split(penguins[['bill_length_mm', 'body_mass_g']],
                                                    penguins['species'],
                                                    random_state=26)

Classifier 1: $k$-Nearest Neighbors 🏡🏠

- Recall, suppose we're given a new penguin, $\vec{x}_\text{new} = \begin{bmatrix} \text{Bill Length}_\text{new} \\ \text{Body Mass}_\text{new} \end{bmatrix}$.

- The $k$-Nearest Neighbors classifier ($k$-NN for short) classifies $\vec{x}_\text{new}$ by:

- Finding the $k$ closest points in the training set to $\vec{x}_\text{new}$.

- Predicting that $\vec{x}_\text{new}$ belongs to the most common class among those $k$ closest points.

- This approach doesn't depend on the number of classes, meaning that we can directly use a $k$-NN classifier for multiclass problems.
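The two steps above translate almost directly into code. A from-scratch sketch (not sklearn's implementation), assuming Euclidean distance and breaking vote ties by whichever class `Counter.most_common` lists first; `knn_predict` is a hypothetical helper and the training points are made up:

```python
import numpy as np
from collections import Counter

def knn_predict(X_demo, y_demo, x_new, k=5):
    """Predict the class of x_new as the most common class among its k nearest training points."""
    distances = np.linalg.norm(X_demo - x_new, axis=1)   # Euclidean distance to every training point
    nearest = np.argsort(distances)[:k]                   # indices of the k closest points
    votes = Counter(y_demo[nearest])
    return votes.most_common(1)[0][0]

# Made-up penguins: [bill length (mm), body mass (g)].
X_demo = np.array([[38.0, 3600], [39.5, 3800], [40.2, 3500],
                   [47.0, 3700], [49.5, 3800], [50.1, 3900],
                   [46.5, 5000], [48.7, 5400], [50.0, 5300]])
y_demo = np.array(['Adelie'] * 3 + ['Chinstrap'] * 3 + ['Gentoo'] * 3)

print(knn_predict(X_demo, y_demo, np.array([48.0, 5200]), k=3))
print(knn_predict(X_demo, y_demo, np.array([39.0, 3650]), k=3))
```

Nothing in `knn_predict` depends on how many classes there are, which is why $k$-NN handles multiclass problems directly. Because the two features are on very different scales, body mass dominates these Euclidean distances; this sketch does no feature scaling.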

fig

KNeighborsClassifier in sklearn

from sklearn.neighbors import KNeighborsClassifier

- Let's use the default of $k = 5$.

Of course, in practice, we should cross-validate.

model_knn = KNeighborsClassifier(n_neighbors=5)

model_knn.fit(X_train, y_train)


KNeighborsClassifier()

- There are now three colors in the decision boundaries.

util.penguin_decision_boundary(model_knn, X_train, y_train, title="Decision Boundary when k = 5")
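As noted above, in practice $k$ should be chosen by cross-validation. A sketch using `sklearn.model_selection.cross_val_score` on synthetic three-class data from `sklearn.datasets.make_blobs` (a stand-in for the penguins, so the scores won't match this lecture's data); the candidate values of $k$ are arbitrary:

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for a 3-class problem with 2 features (not the penguins data).
X_demo, y_demo = make_blobs(n_samples=300, centers=3, n_features=2,
                            cluster_std=2.0, random_state=0)

ks = [1, 3, 5, 7, 9, 15, 25]
mean_scores = []
for k in ks:
    # Mean accuracy across 5 cross-validation folds for this value of k.
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X_demo, y_demo, cv=5)
    mean_scores.append(scores.mean())

best_k = ks[int(np.argmax(mean_scores))]
print(dict(zip(ks, np.round(mean_scores, 3))), 'best k:', best_k)
```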

Classifier 2: Decision trees 🎄

- Recall, suppose we're given a new penguin, $\vec{x}_\text{new} = \begin{bmatrix} \text{Bill Length}_\text{new} \\ \text{Body Mass}_\text{new} \end{bmatrix}$.

- The decision tree classifier classifies $\vec{x}_\text{new}$ by:

- Asking a series of yes/no questions about $\text{Bill Length}_\text{new}$ and $\text{Body Mass}_\text{new}$, e.g.:
    - Is $\text{Bill Length}_\text{new} \leq 43.25$?
    - If so, is $\text{Bill Length}_\text{new} \leq 41.55$?
    - If not, is $\text{Body Mass}_\text{new} \leq 4125$?
    - $\vdots$
- Once it runs out of questions to ask, predicting that $\vec{x}_\text{new}$ belongs to the **most common class** among training set points that had the same answers as $\vec{x}_\text{new}$.

- This approach also doesn't depend on the number of classes, meaning that we can directly use a decision tree classifier for multiclass problems.

DecisionTreeClassifier in sklearn

from sklearn.tree import DecisionTreeClassifier

- Let's fix `max_depth=3` so that we can visualize the resulting tree.

Again, in practice, we should cross-validate.

model_tree = DecisionTreeClassifier(max_depth=3)

model_tree.fit(X_train, y_train)


DecisionTreeClassifier(max_depth=3)

- Note that the colors below don't directly match the colors in the scatter plot from earlier.

from sklearn.tree import plot_tree

plt.figure(figsize=(13, 5))

plot_tree(model_tree, feature_names=X_train.columns,
          class_names=['Adelie', 'Chinstrap', 'Gentoo'],
          filled=True, fontsize=10, impurity=False);

util.penguin_decision_boundary(model_tree, X_train, y_train, title="Decision Boundary for a Decision Tree\nwith Depth = 3")
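The same yes/no questions can also be printed as text with `sklearn.tree.export_text`, which is handy when a plotted tree is hard to read. The sketch below uses synthetic three-class data from `make_blobs` (not the penguins, so the split values won't match the tree above; `feature_a` and `feature_b` are placeholder names). It also checks the rule from earlier: every point that lands in a given leaf is predicted to be that leaf's most common training class.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.tree import DecisionTreeClassifier, export_text

X_demo, y_demo = make_blobs(n_samples=300, centers=3, n_features=2, random_state=0)

tree_demo = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_demo, y_demo)
rules = export_text(tree_demo, feature_names=['feature_a', 'feature_b'])
print(rules)

# Each leaf predicts the most common training class among the points that reach it.
leaves = tree_demo.apply(X_demo)     # index of the leaf each training point lands in
preds = tree_demo.predict(X_demo)
for leaf in np.unique(leaves):
    in_leaf = leaves == leaf
    majority = np.bincount(y_demo[in_leaf]).argmax()
    assert (preds[in_leaf] == majority).all()
```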

Classifier 3: Logistic regression 📈

- Logistic regression models the probability of belonging to class 1, given a feature vector:

$$P(y_i = 1 | \vec{x}_i) = \sigma\left(\vec{w} \cdot \text{Aug}(\vec{x}_i)\right)$$

- Our formulation of logistic regression only worked in the context of binary classification, i.e. when $y_i \in \{0, 1\}$.

- Can we still use logistic regression somehow, now that we have three classes?

"One vs. rest" logistic regression

- One approach: Build three separate logistic regression models, each of which treats the problem as binary.
    - One that predicts the probability of class Adelie vs. not Adelie. Here, $y = 1$ means "Adelie" and $y = 0$ means "not Adelie".
    - One that predicts the probability of class Chinstrap vs. not Chinstrap.
    - One that predicts the probability of class Gentoo vs. not Gentoo.

- For a new penguin $\vec{x}_\text{new}$, compute all three probabilities, and predict the class with the highest predicted probability!

- This technique is called one-vs-rest.

from sklearn.multiclass import OneVsRestClassifier

model_logistic_ovr = OneVsRestClassifier(LogisticRegression())

model_logistic_ovr.fit(X_train, y_train)


OneVsRestClassifier(estimator=LogisticRegression())


util.penguin_decision_boundary(model_logistic_ovr, X_train, y_train, title="Decision Boundary for One-vs-Rest\nLogistic Regression")

- Note that the resulting decision boundaries are still linear!
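One-vs-rest can also be written out by hand: fit one binary `LogisticRegression` per class, then predict the class whose model gives the highest probability. A sketch on synthetic three-class data from `make_blobs` (a stand-in for the penguins), compared against `OneVsRestClassifier`. Because the three binary models are fit independently, their three probabilities for a point need not sum to 1.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

X_demo, y_demo = make_blobs(n_samples=300, centers=3, n_features=2,
                            cluster_std=2.0, random_state=0)
classes = np.unique(y_demo)

# One binary model per class: y = 1 means "this class", y = 0 means "any other class".
binary_models = [LogisticRegression().fit(X_demo, (y_demo == c).astype(int)) for c in classes]

# Column j holds P(class j | x) according to the j-th binary model.
probs = np.column_stack([m.predict_proba(X_demo)[:, 1] for m in binary_models])
manual_preds = classes[np.argmax(probs, axis=1)]

ovr_preds = OneVsRestClassifier(LogisticRegression()).fit(X_demo, y_demo).predict(X_demo)
print((manual_preds == ovr_preds).mean())   # fraction of points where the two approaches agree
```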